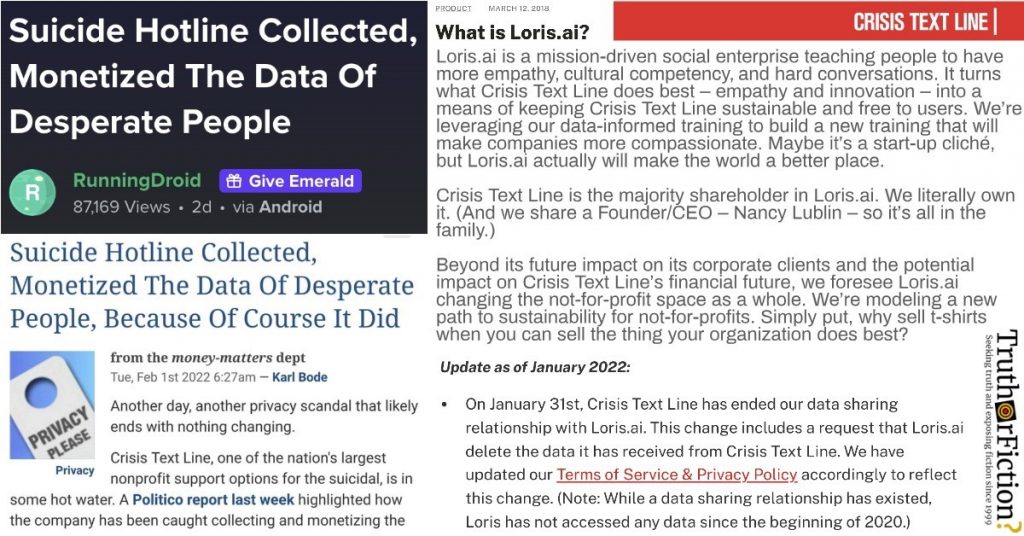

On February 1 2022, an Imgur user shared a screenshot under the title “Suicide Hotline Collected, Monetized The Data Of Desperate People,” showing an article or a blog post:

At the top of the screenshot, a byline showed that the piece was published on February 1 2022, and was thus recent when it was shared. Underneath the image, the poster linked to a Techdirt.com report with a slightly longer headline: “Suicide Hotline Collected, Monetized The Data Of Desperate People, Because Of Course It Did.”

Fact Check

Claim: Suicide hotline Crisis Text Line "collected and monetized" callers’ data.

Description: The claim is that the Crisis Text Line, a suicide hotline, collected and monetized callers’ data.

Media Bias/Fact Check indicated that it rates Techdirt “High for factual reporting due to proper sourcing and a clean fact check record.”

The piece, which described Crisis Text Line as one of the largest hotlines of its type in the United States, began:

Another day, another privacy scandal that likely ends with nothing changing.

Crisis Text Line, one of the nation’s largest nonprofit support options for the suicidal, is in some hot water. A Politico report [in January 2022] highlighted how the company has been caught collecting and monetizing the data of callers… to create and market customer service software. More specifically, Crisis Text Line says it “anonymizes” some user and interaction data (ranging from the frequency certain words are used, to the type of distress users are experiencing) and sells it to a for-profit partner named Loris.ai. Crisis Text Line has a minority stake in Loris.ai, and gets a cut of their revenues in exchange.

As we’ve seen in countless privacy scandals before this one, the idea that this data is “anonymized” is once again held up as some kind of get out of jail free card … as we’ve noted more times than I can count, “anonymized” is effectively a meaningless term in the privacy realm. Study after study after study has shown that it’s relatively trivial to identify a user’s “anonymized” footprint when that data is combined with a variety of other datasets.

On January 28 2022, Politico published “Suicide hotline shares data with for-profit spinoff, raising ethical questions,” reporting:

Crisis Text Line is one of the world’s most prominent mental health support lines, a tech-driven nonprofit that uses big data and artificial intelligence to help people cope with traumas such as self-harm, emotional abuse and thoughts of suicide.

But the data the charity collects from its online text conversations with people in their darkest moments does not end there: The organization’s for-profit spinoff uses a sliced and repackaged version of that information to create and market customer service software.

Crisis Text Line says any data it shares with that company, Loris.ai, has been wholly “anonymized,” stripped of any details that could be used to identify people who contacted the helpline in distress. Both entities say their goal is to improve the world — in Loris’ case, by making “customer support more human, empathetic, and scalable.”

In turn, Loris has pledged to share some of its revenue with Crisis Text Line. The nonprofit also holds an ownership stake in the company, and the two entities shared the same CEO for at least a year and a half. The two call their relationship a model for how commercial enterprises can help charitable endeavors thrive.

It wasn’t immediately clear how the data-sharing arrangement between Crisis Text Line and Loris.ai came to light, but Politico suggested that its own investigation might have brought it to wider attention:

After POLITICO began asking questions about its relationship with Loris, the nonprofit changed wording on its website to emphasize that “Loris does not have open-ended access to our data; it has limited contractual rights to periodically ask us for certain anonymized data.” Rodriguez said such sharing may happen every few months.

Politico also detailed potential matters of concern with the arrangement:

Ethics and privacy experts contacted by POLITICO saw several potential problems with the arrangement.

Some noted that studies of other types of anonymized datasets have shown that it can sometimes be easy to trace the records back to specific individuals, citing past examples involving health records, genetics data and even passengers in New York City taxis.

Others questioned whether the people who text their pleas for help are actually consenting to having their data shared, despite the approximately 50-paragraph disclosure the helpline offers a link to when individuals first reach out.

The article quoted a passage from a Crisis Text Line blog post (“What is Loris.ai?”), originally published in March 2018. The post’s final three paragraphs said:

Loris.ai is a mission-driven social enterprise teaching people to have more empathy, cultural competency, and hard conversations. It turns what Crisis Text Line does best – empathy and innovation – into a means of keeping Crisis Text Line sustainable and free to users. We’re leveraging our data-informed training to build a new training that will make companies more compassionate. Maybe it’s a start-up cliché, but Loris.ai actually will make the world a better place.

Crisis Text Line is the majority shareholder in Loris.ai. We literally own it. (And we share a Founder/CEO – Nancy Lublin – so it’s all in the family.)

Beyond its future impact on its corporate clients and the potential impact on Crisis Text Line’s financial future, we foresee Loris.ai changing the not-for-profit space as a whole. We’re modeling a new path to sustainability for not-for-profits. Simply put, why sell t-shirts when you can sell the thing your organization does best?

The Imgur post was published on February 1 2022, as was the Techdirt article. A January 2022 update from Crisis Text Line was added to the top of the blog post quoted above:

Update as of January 2022:

- On January 31st, Crisis Text Line has ended our data sharing relationship with Loris.ai. This change includes a request that Loris.ai delete the data it has received from Crisis Text Line. We have updated our Terms of Service & Privacy Policy accordingly to reflect this change. (Note: While a data sharing relationship has existed, Loris has not accessed any data since the beginning of 2020.)

- Crisis Text Line founded Loris.ai and currently owns shares in the company.

- Loris.ai has their own independent leadership team and hired their own CEO, Etie Hertz, in August 2019. Nancy Lublin has not been the CEO of Crisis Text Line since June 2020.

- Crisis Text Line does not share personally identifiable data with Loris or any other company.

- Crisis Text Line shared scrubbed, anonymized data, issue tags, and some sections of our training materials with Loris — stripped of personally identifiable information from texters, volunteers, or any other person.

- Loris.ai does not have open access to our anonymized data; Loris must make a request for scrubbed, anonymized data from us, and Crisis Text Line retains discretion as to what is shared.

- Loris pays us to sublease office space, which is disclosed in our financials.

On February 1 2022, an Imgur user shared a screenshot of a Techdirt article titled “Suicide Hotline Collected, Monetized The Data Of Desperate People, Because Of Course It Did.” Techdirt cited Politico’s January 28 2022 article about a data-sharing relationship between Crisis Text Line and Loris.ai, which Crisis Text Line had described on its blog as early as March 2018. Crisis Text Line updated that blog post to state that as of January 31 2022, “Crisis Text Line has ended our data sharing relationship with Loris.ai,” adding that it submitted “a request that Loris.ai delete the data it has received from Crisis Text Line.” We have rated the claim Decontextualized, because the initial Imgur post did not reflect those updates.

- Suicide Hotline Collected, Monetized The Data Of Desperate People

- Suicide Hotline Collected, Monetized The Data Of Desperate People, Because Of Course It Did

- Techdirt | Media Bias Fact Check

- Suicide hotline shares data with for-profit spinoff, raising ethical questions

- What is Loris.ai? | Crisis Text Line